Monte Carlo contact's corner

This page collects useful information for Monte Carlo contacts on how to work in McM.

Preliminary steps

Let us know your name! When we have the names of all MC subgroup contacts we will create a mailing list.

Register in McM following these instructions (as a generator contact, not as a normal user):

Ask the generator conveners (cms-phys-conveners-GEN@cern.ch) for permission to upload LHE files to EOS disks.

Subscribe to the following HyperNews (HN) forums:

to actively use: https://hypernews.cern.ch/HyperNews/CMS/get/prep-ops.html

to ask questions: https://hypernews.cern.ch/HyperNews/CMS/get/generators.html

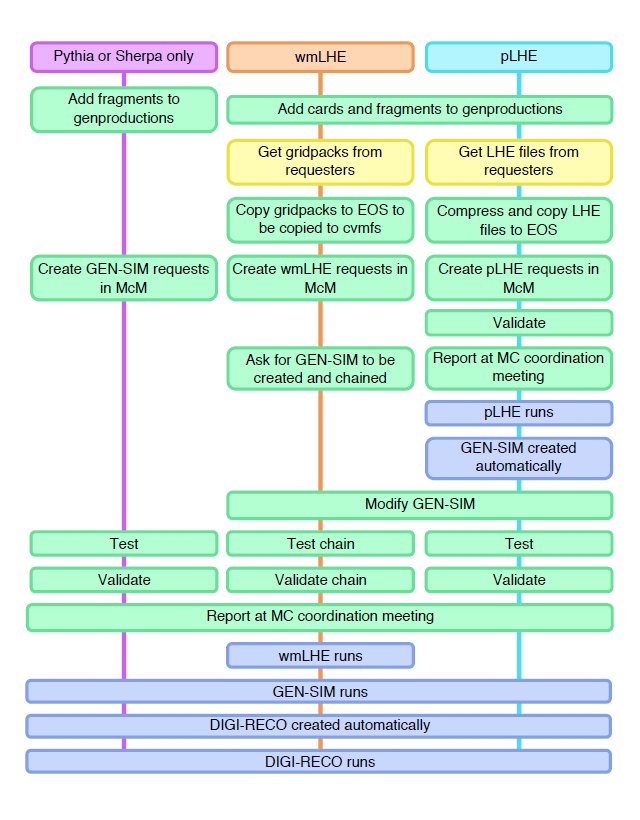

A general flow-chart of MC request submission (courtesy of D. Sheffield)

1 - Preparing LHE files

Many generators (POWHEG, MadGraph5_aMCatNLO, etc.) use LHE files as the starting point for event generation. If you use a generator that performs the matrix-element (ME) step on the fly (e.g. standalone Pythia), just skip this part.

See the dedicated section of this TWiki for Matrix Element generators.

If you need help preparing MadGraph5_aMCatNLO LHE files, contact the CMS MadGraph team through the generators HyperNews.

There are two ways to technically produce LHE files:

In most cases, use campaigns called "xxxwmLHE" to request central production of the LHE files; this also helps keep track of the sample.

Only if this is not possible, or if analysts provide the LHE files directly, produce them "privately" and use campaigns called "xxxpLHE".

P.S.: It is strongly recommended to check the LHE files locally before uploading them, so that broken LHE files, which cause many problems later in production, are not uploaded, e.g. with:

xmllint --stream --noout "${file}.lhe" > /dev/null 2>&1 || echo "xmllint integrity check failed on ${file}.lhe" >&2
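To check every file before uploading, the one-line test above can be wrapped in a loop over a directory. This is a minimal sketch, assuming uncompressed files named *.lhe in a single directory; check_lhe_dir is a hypothetical helper name, not an existing CMS tool.

```bash
#!/bin/bash
# Hypothetical helper: run the xmllint integrity check on every *.lhe file
# in a directory. Assumes uncompressed files; returns non-zero if any fails.
check_lhe_dir() {
  local dir="$1" f failed=0
  for f in "$dir"/*.lhe; do
    [ -e "$f" ] || continue    # no *.lhe files: the glob stays unexpanded
    if ! xmllint --stream --noout "$f" > /dev/null 2>&1; then
      echo "xmllint integrity check failed on $f" >&2
      failed=1                 # keep going so that every broken file is listed
    fi
  done
  return "$failed"
}

# Example: check_lhe_dir tempdir && echo "all LHE files are well-formed"
```

Reporting all broken files in one pass, instead of stopping at the first one, makes it easier to regenerate only the affected files.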

1.1 - Using wmLHE campaigns

Follow these instructions only if you want automatic production of LHE files:

The supported generators for this option are MadGraph5_aMCatNLO (which replaces the old MadGraph) and POWHEG v2 (if a process is not yet available in v2, we temporarily accept v1).

To use this option, you first need to produce a "gridpack" (MadGraph5_aMCatNLO) or an executable/grid-file tarball (POWHEG). Instructions for producing them can be found at:

Before producing the gridpack/tarball, upload the run_card.dat/proc_card.dat cards (for MadGraph5_aMCatNLO) or the powheg.input cards (for POWHEG) to the following repositories (see the instructions here):

Exception: if you use cards provided in the example card repository for MadGraph5_aMCatNLO or in the example card repository for POWHEG without any changes, there is no need to upload them to the production area.

Generator conveners must approve the pull request; at that point the cards can be used to produce the gridpack/tarball.

Once you have the gridpack/tarball, copy it (with cmsStage) to this area of EOS, after creating a suitable subdirectory (with cmsMkdir):

e.g.

After a few hours these files are automatically copied to the CVMFS repository under:

At this point they can be used with externalLHEProducer (see below). Of course, already existing gridpacks can also be used.

1.2 - Using pLHE campaigns

Follow these instructions only if you have produced the LHE files privately:

Store them in a temporary directory, e.g. tempdir/.

In any CMSSW release, produce a configuration script with the following command, which converts the LHE files into an EDM ROOT file:

Change the LHESource PSet to take your files as input (if possible, run on all of them, to catch individual corrupted files) and run normally.

If cmsRun processes the script to the end without exceptions or errors, your LHE files are validated.

Your LHE files should go into a new directory named after the first available number in /store/lhe. To do this, use the script GeneratorInterface/LHEInterface/scripts/cmsLHEtoEOSManager.py. The command format is:

e.g.

If the compress option does not work, remove it. In particular, do not use it if the samples are going to be processed in releases older than 5.3.8.
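The release condition above can be checked mechanically. This is a minimal sketch, assuming release names of the form CMSSW_X_Y_Z (optionally with a suffix such as _patch2) and GNU sort with version ordering (-V); compress_ok is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: succeed if the given release is 5.3.8 or newer,
# i.e. if the compress option may be used according to the rule above.
compress_ok() {
  local v="${1#CMSSW_}"   # CMSSW_5_3_11_patch2 -> 5_3_11_patch2
  v="${v//_/.}"           # 5_3_11_patch2 -> 5.3.11.patch2
  # sort -V -C succeeds only if the two lines are already in version order,
  # i.e. if 5.3.8 <= v.
  printf '%s\n' 5.3.8 "$v" | sort -V -C
}

# Example: compress_ok CMSSW_7_1_20 && echo "compress option allowed"
```

Pre-release suffixes (e.g. _pre1) are not treated specially by this sketch.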

If you have many LHE files, each with a small number of events (typical output of batch-queue processing), it may be a good idea to first merge them into one or a few files. To do this, download the script mergeLheFiles.cpp and modify the following line, which defines the merged output file:

and perform the following actions:

and you will get a single LHE file called out.lhe.

2 - Submitting a request in McM

Complete instructions on how to use this tool are here. To submit a sample, follow these steps:

Go to McM

Make sure you understand which campaign you want to use. Each base name (e.g. Summer12, RunIIWinter15, etc.) corresponds to a well-defined release cycle and set of DB conditions.

The procedure differs depending on whether you have prepared gridpacks/LHE files in step 1 or the request does not need an LHE step.

2.1 - If starting from LHE files/wmLHE workflows

You must start from a campaign called xxxpLHE or xxxwmLHE. To create a new request in these campaigns you can follow the creation instructions, but in most cases it is simpler to clone an existing sample in the campaign:

If the original request is very old, use the "option reset" button

Edit the cloned request

You can tick the "Valid" box and set a number of events n in the range 0 < n <= nTotal. Do so at your own risk, as the validation may crash badly.

If this is an "xxxpLHE" request, set "MCDB Id" to the LHE number on EOS (see above); otherwise set it to 0.

Enter the number of events actually available in the upload above, or fewer. Never enter more than this number: e.g. if you have uploaded 199800 events in LHE files, do not round up to 200000.
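To get the exact number to enter, the events in the uploaded files can be counted directly. This is a minimal sketch, assuming uncompressed LHE files in which each event starts with an <event> tag (possibly with attributes) on its own line; count_lhe_events is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: print the total number of events in the given LHE files.
count_lhe_events() {
  local total=0 n f
  for f in "$@"; do
    [ -r "$f" ] || { echo "cannot read $f" >&2; return 1; }
    # Count opening tags such as <event> or <event npLO=" -1 ">;
    # closing </event> tags do not match the pattern.
    n=$(grep -c '^[[:space:]]*<event[ >]' "$f")
    total=$((total + n))
  done
  echo "$total"
}

# Example: count_lhe_events tempdir/*.lhe
```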

Enter the cross-section if analysts are supposed to use it! If better estimates of the total cross-section than the present generator's are available (e.g. at higher QCD orders, or from the LHC XS WG), just enter 1.0. Leaving -1 is not OK.

For filter and matching efficiencies always put 1 with error 0. Leaving -1 is not OK.

Set the generators (use the JSON format shown in the original request)

If this is an "xxxwmLHE" request, enter the generator fragment for external LHE production; otherwise leave it blank. You can paste it by hand into the big "Fragment" window:

An example xxxwmLHE fragment is here (change only the tarball name, the commented link to the cards at a specific GitHub revision and, if needed, nEvents per job; leave the rest of the configuration unchanged). Note that for MadGraph5_aMC@NLO gridpacks, the git revision is also written in gridpack_generation.log inside the tarball, if in doubt:

For time and size per event, just enter dummy (i.e. very small) values, e.g. 0.001 s and 30 kB.

Put the dataset name following these rules

Report at the MC coordination meeting (Thursday, 3 pm) that the request is ready (if it is very urgent, write directly to hn-cms-prep-ops@cern.ch). The request is then accepted (or you are asked to revise it) and prioritized. At this point the production managers "chain" the request, i.e. the system automatically creates a GENSIM request in the campaign with the same base name and the GS suffix (e.g. RunIIWinter15pLHE or RunIIWinter15wmLHE creates an entry in RunIIWinter15GS). This request does not proceed automatically, so it is up to you to edit it:
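The campaign naming convention used when chaining can be written as a simple string rule: the pLHE or wmLHE suffix is replaced by GS. This is only an illustration of that convention; the chaining itself is done in McM by the production managers, and gs_campaign_for is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: map an LHE campaign name to the GS campaign it is
# chained into, following the xxxpLHE / xxxwmLHE -> xxxGS convention.
gs_campaign_for() {
  local c="$1"
  case "$c" in
    *wmLHE) echo "${c%wmLHE}GS" ;;
    *pLHE)  echo "${c%pLHE}GS" ;;
    *) echo "not an xxxpLHE/xxxwmLHE campaign: $c" >&2; return 1 ;;
  esac
}

# Example: gs_campaign_for RunIIWinter15wmLHE   # prints RunIIWinter15GS
```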

First of all, enter the generator fragment for showering/hadronizing/decaying your MC sample. You can paste it by hand into the big window, or give its name and fill in "Fragment tag" with the corresponding package tag on GitHub. If you need to upload a new fragment to GitHub, follow the instructions here. A list of already available fragments is here. Possible associations:

Settings in these files should be adapted to your case (flavor scheme, extra weak particles, etc.). In particular, if you use jet matching and the gridpack is new (created by yourself), the qCut must be measured (see step 4 on how to measure it).

You should tick the "Valid" box and set the number of events n to about 30-50.

Enter the cross-section after hadronization (see step 4 on how to measure it) if analysts are supposed to use it! If better estimates of the total cross-section than the present generator's are available (e.g. at higher QCD orders, or from the LHC XS WG), just enter 1.0. Leaving -1 is not OK.

Insert sensible filter and matching efficiencies (see step 4 on how to measure them). Leaving -1 is not OK.

Insert sensible timing and size (see step 4 on how to measure them).

Move the request to the next step (chain validation): in the action box next to the request name, click on "chained request", then, on the page that opens, click on "validate chain" in the action box. When this is completed you will receive an email from McM.

At that point, if you activated the validation, go back to the request, click on the "Select View" tab above and tick "Validation": a new column should appear with a link to a DQM page.

If the validation fails, you will be notified by email with the logfile attached. So go back and edit the request to fix it.

In the DQM page, navigate to the proper folder, check in the plots that everything looks as expected, and then define the request (click "next step" again).

2.2 - If not starting from LHE files

You must start from a campaign called xxxGS, e.g. RunIIWinter15GS. To create a new request in these campaigns you can follow the creation instructions, but in most cases it is simpler to clone an existing sample in the campaign:

If the original request is very old, use the "option reset" button

Edit the cloned request

First of all, enter the generator fragment for generating your MC sample. You can paste it by hand into the big window, or give its name and fill in "Fragment tag" with the corresponding package tag on GitHub. If you need to upload a new fragment to GitHub, follow the instructions here.

You should tick the "Valid" box and set the number of events n in the range 30-50.

Add the cross-section (see step 4 on how to measure it). Leaving -1 is not OK.

Add the filter and matching efficiencies (see step 4 on how to measure them); if they are unity, put 1 with error 0. Leaving -1 is not OK.

Set the generators (use the JSON format shown in the original request)

Set time and size per event (see step 4 on how to measure them).

Put the dataset name following these rules

Move the request to the next step (validation) with the small ">" symbol beside the request name in the "view" panel. When this is completed you will receive an email from McM.

At that point, go back to the request, click on the "Select View" tab above and tick "Validation": a new column should appear with a link to a DQM page.

If the validation fails, you will be notified by email with the logfile attached. So go back and edit the request to fix it.

In the DQM page, navigate to the proper folder, check in the plots that everything looks as expected, and then define the request (click "next step" again).

Finally, report at the MC coordination meeting (Thursday, 3 pm) that the request is ready (if it is very urgent, write directly to hn-cms-prep-ops@cern.ch).

3 - Checking status

To check the status of a submitted request:

Go to the request, click on the "Select View" tab above and tick "Reqmgr name": a new column will appear with some links ("details", "stats", etc.). Click on the small eye next to the links to see a graph of the sample status.

4 - Additional things

Some quantities must be measured so that the production team knows the properties of the request and can go ahead with central production. The filter efficiency can be measured by running just the GEN step, while the timing and size are the time needed to produce one GENSIM event and the size of that event.

To start this procedure, click the "Get setup" button of the request and check that the output is correct (N.B. for this you must upload the generator decay fragment beforehand!).

Measure filter efficiencies and cross-section

You need this step if:

you want to measure a cross-section after hadronization (for backgrounds; for signal, precise estimates are already available from the LHC cross-section WG)

you have filters on final state particles, so filter efficiency is smaller than 1

you have jet matching cuts, so matching efficiency is smaller than 1

If you don't need this step, all the numbers above are 1; just proceed to "Measure timing and size".

Use the script CmsDrivEasier.sh in this way:

e.g.

where n is a number of events large enough to measure the efficiency precisely; e.g. if the efficiency is expected to be O(1/100), you could use 100000 events. E.g.

In the output, look for the string "GenXsecAnalyzer"; below it you will find a detailed log of the needed quantities.
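To choose n, a standard binomial estimate can help (a generic statistics argument, not a CMS prescription): an efficiency eps measured from n events has uncertainty sqrt(eps*(1-eps)/n), so a relative uncertainty r requires n = (1-eps)/(eps*r^2). For eps of about 0.01 and r = 3% this gives about 1.1 x 10^5 events, in line with the 100000 quoted above. This is a minimal sketch; events_for_efficiency is a hypothetical helper name and eps is your prior guess of the efficiency.

```bash
#!/bin/bash
# Hypothetical helper: number of events n needed to measure an efficiency eps
# with relative statistical uncertainty r, from the binomial error
#   sigma_eps = sqrt(eps*(1-eps)/n)  =>  n = (1-eps) / (eps*r^2),
# rounded up to the next integer.
events_for_efficiency() {
  awk -v eps="$1" -v r="$2" 'BEGIN {
    n = (1 - eps) / (eps * r * r)
    c = int(n)
    if (c < n) c++
    print c
  }'
}

# Example: events_for_efficiency 0.01 0.03   # roughly 110000
```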

Measure proper value of qCut

You need this step if you have jet matching cuts.

Repeat all the steps of the previous paragraph, using many events and a reasonable starting qCut: this should be about 1.5-2 times the ptj or xqcut parameter used in the ME generation. At the end you should find a ROOT file in /tmp/<your username>/<request ID>.root. Then download this macro and run it in ROOT as:

test.root will contain a canvas showing the differential jet rate distributions, split according to the number of original partons in the event. The individual histograms do not match perfectly, because additional jets are created by the parton shower: for the plot corresponding to the i-th jet, the sample with i-1 partons drops sharply at pT = qCut, while the sample with i partons rises sharply.

You should check that the TOTAL jet rate distributions appear smooth at pT ~ qCut.

If there is a kink somewhere, try a larger qCut; if there is a dip, try a smaller one.

Repeat until you find smooth distributions.
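The suggested starting value is simple arithmetic on the matrix-element cut. This minimal sketch prints the 1.5x and 2x values, assuming ptj/xqcut is given in GeV; qcut_start_range is a hypothetical helper name, and the final qCut still has to come from the smoothness check described above.

```bash
#!/bin/bash
# Hypothetical helper: print the suggested starting range for qCut,
# 1.5 to 2 times the ptj/xqcut value used in the ME generation (GeV).
qcut_start_range() {
  awk -v x="$1" 'BEGIN { printf "%g %g\n", 1.5 * x, 2 * x }'
}

# Example: qcut_start_range 20   # prints "30 40"
```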

Measure timing and size

Use the script CmsDrivEasier.sh in this way:

e.g.

where 30 (or 30/filter efficiency, if the filter efficiency is not 1) is usually a sufficient number of events to measure time and size.
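The number of events for this measurement follows directly from the rule above. This minimal sketch rounds 30/filter efficiency up to an integer and defaults to an efficiency of 1; events_for_timing is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: events to run for the timing/size measurement,
# 30 divided by the filter efficiency (default 1, i.e. no filter), rounded up.
events_for_timing() {
  awk -v eff="${1:-1}" 'BEGIN { n = 30 / eff; c = int(n); if (c < n) c++; print c }'
}

# Example: events_for_timing 0.07   # prints 429
```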

At the end of the output you get the needed statistics:

AvgEventTime is the value to fill for time/event (in sec)

Timing-tstoragefile-write-totalMegabytes divided by TotalEvents is the size/event in MB (McM expects it in kB, so convert it)
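Turning these numbers into the values McM asks for is a short calculation. This minimal sketch takes the three values read from the output as arguments and prints time/event in seconds and size/event in kB. It assumes 1 MB = 1024 kB (use 1000 instead if that is the convention you follow; the difference is about 2%), and per_event_stats is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: per-event time and size from the end-of-job statistics.
# Arguments: AvgEventTime (s), Timing-tstoragefile-write-totalMegabytes, TotalEvents.
# Assumption: 1 MB = 1024 kB for the conversion to the unit used in McM.
per_event_stats() {
  awk -v t="$1" -v mb="$2" -v n="$3" 'BEGIN {
    printf "time/event: %g s\n", t
    printf "size/event: %g kB\n", mb * 1024 / n
  }'
}

# Example: per_event_stats 0.5 3 30
```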

Validation failure because of non existing wmLHE dataset

If a GENSIM request is chained to a wmLHE request produced long ago, validation may fail: validation runs at CERN, but the files of the LHE dataset may have been moved elsewhere in the meantime. In that case you need to request a PhEDEx transfer.

Click on "Subscribe to PhEDEx".

In the first box you will see that dataset. You can put all the datasets you need in the same box.

Choose T2_CH_CERN.

Choose AnalysisOps.

Add a reason, e.g. "For McM validation".

Click Accept.

Please ping the prep-ops HN so that we can approve it quickly.
